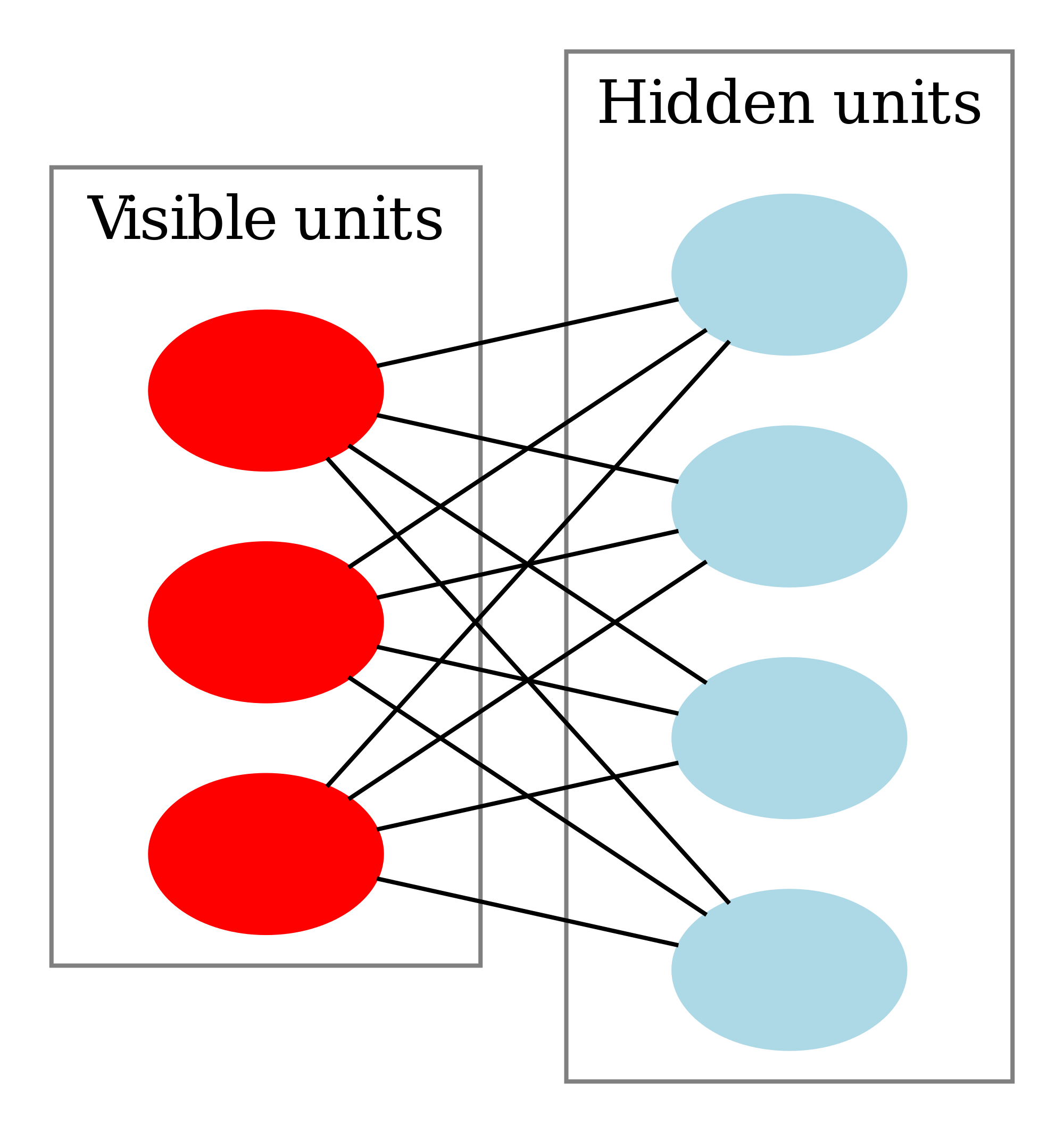

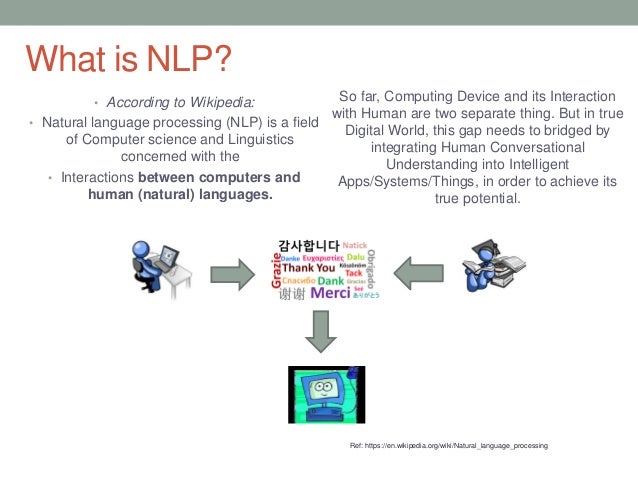

Natural language processing (NLP) is a theory-motivated range of computational techniques for the automatic analysis and representation of human language. NLP research has evolved from the era of punch cards and batch processing, in which the analysis of a sentence could take up to 7 minutes, to the era of Google and its peers, in which millions of webpages can be processed in less than a second (Cambria and White, 2014). NLP enables computers to perform a wide range of natural language related tasks at all levels, ranging from parsing and part-of-speech (POS) tagging to machine translation and dialogue systems.

Deep learning architectures and algorithms have already made impressive advances in fields such as computer vision and pattern recognition. Following this trend, recent NLP research is increasingly focusing on new deep learning methods (see Figure 1). For decades, machine learning approaches to NLP problems were based on shallow models (e.g., SVM and logistic regression) trained on very high-dimensional, sparse features. In the last few years, neural networks based on dense vector representations have been producing superior results on various NLP tasks. This trend was sparked by the success of word embeddings (Mikolov et al., 2010, 2013a) and deep learning methods (Socher et al., 2013). Deep learning enables multi-level automatic feature representation learning. In contrast, traditional machine-learning-based NLP systems rely heavily on hand-crafted features, which are time-consuming to build and often incomplete.

Figure 1: Percentage of deep learning papers in ACL, EMNLP, EACL, NAACL over the last 6 years (long papers).

Collobert et al.
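To make the contrast between very high-dimensional sparse features and dense vector representations concrete, here is a minimal sketch in Python. The vocabulary, the three-dimensional vectors, and the helper names (`VOCAB`, `one_hot`, `EMBEDDINGS`, `cosine`) are hypothetical. The embedding values are hand-picked rather than learned, so the example only illustrates the shape of the two representations, not how embeddings such as word2vec are trained.

```python
import math

# Toy vocabulary (hypothetical). Real systems use vocabularies of tens of
# thousands of words or more, so one-hot vectors become very long and sparse.
VOCAB = ["good", "great", "terrible", "movie"]


def one_hot(word: str) -> list[float]:
    """Sparse representation: one dimension per vocabulary entry, a single 1.0."""
    vec = [0.0] * len(VOCAB)
    vec[VOCAB.index(word)] = 1.0
    return vec


# Dense representation: a small, fixed number of dimensions per word.
# These values are hand-picked for illustration only (hypothetical);
# in practice they are learned from large text corpora.
EMBEDDINGS = {
    "good": [0.8, 0.6, 0.1],
    "great": [0.9, 0.5, 0.2],
    "terrible": [-0.7, 0.6, 0.1],
    "movie": [0.0, 0.1, 0.9],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


if __name__ == "__main__":
    # One-hot vectors of distinct words are orthogonal, so their similarity is 0.
    print(cosine(one_hot("good"), one_hot("great")))
    # Dense vectors can encode graded similarity between related words.
    print(round(cosine(EMBEDDINGS["good"], EMBEDDINGS["great"]), 3))
    print(round(cosine(EMBEDDINGS["good"], EMBEDDINGS["terrible"]), 3))
```

The one-hot comparison always yields 0 for two different words, whatever they mean, whereas the dense vectors place "good" closer to "great" than to "terrible". This graded notion of similarity is one reason dense representations generalize better than sparse, hand-crafted features.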

This project contains an overview of recent trends in deep learning based natural language processing (NLP).

Goals:

- Create a friendly and open resource to help guide researchers and anyone interested in learning about modern techniques applied to NLP.
- A collaborative project where expert researchers can suggest changes (e.g., incorporate state-of-the-art (SOTA) results) based on their recent findings and experimental results.

Sections include:

- Parallelized Attention: The Transformer
- Deep Reinforced Models and Deep Unsupervised Learning
- Reinforcement learning for sequence generation
- Unsupervised sentence representation learning
- Performance of Different Models on Different NLP Tasks

There are various ways to contribute to this project. Refer to the issue section of the GitHub repository to learn more about how you can help, or make suggestions by submitting a new issue.
